12. Sinergym with Google Cloud

This project provides some functionality built on the gcloud Python API in sinergym/utils/gcloud.py. Our aim is to make it easy to configure a Google Cloud account and combine it with Sinergym.

The main idea is to create a virtual machine (VM) using Google Compute Engine (GCE) in order to run our Sinergym container on it. At the same time, this remote container will update the Weights and Biases tracking server with artifacts if the experiment is configured with those options.

When an instance has finished its job, the container automatically removes its host instance from Google Cloud Platform if the experiment has been configured with this option.

A detailed explanation follows.

12.1. Preparing Google Cloud

12.1.1. First steps (configuration)

First, you need a Google Cloud account set up and the SDK configured (auth, billing, project ID, etc.). If you don’t have this, we recommend checking their documentation. Second, Docker must be installed in order to manage these containers in Google Cloud.

You can link your gcloud and Docker accounts with the following command (see authentication methods):

$ gcloud auth configure-docker

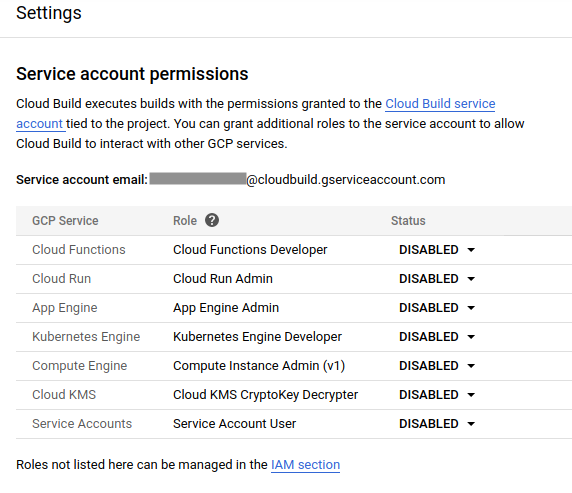

To avoid problems later with the image build and Google Cloud functionality in general, we recommend granting permissions to Google Cloud Build at the beginning (see this documentation).

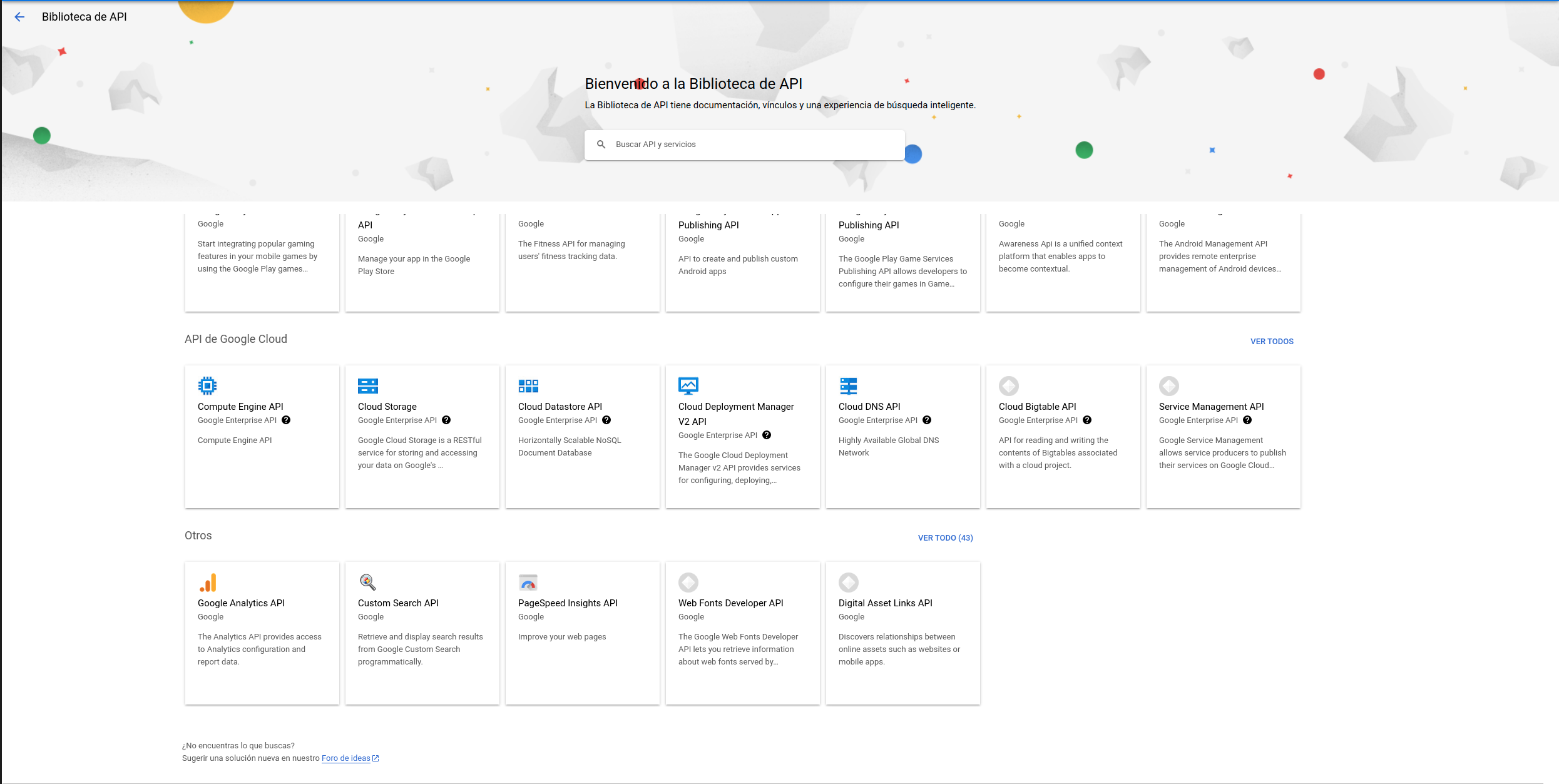

Next, we are going to enable Google Cloud services in the API library. These are the APIs we currently need:

Google Container Registry API

Artifact Registry API

Cloud Run API

Compute Engine API

Cloud Logging API

Cloud Monitoring API

Cloud Functions API

Cloud Pub/Sub API

Cloud SQL Admin API

Cloud Firestore API

Cloud Datastore API

Service Usage API

Cloud Storage

Gmail API

Hence, you will have to enable these services in your Google account. You can do it using the gcloud client SDK:

$ gcloud services list

$ gcloud services enable artifactregistry.googleapis.com \
    cloudapis.googleapis.com \
    cloudbuild.googleapis.com \
    containerregistry.googleapis.com \
    gmail.googleapis.com \
    sql-component.googleapis.com \
    sqladmin.googleapis.com \
    storage-component.googleapis.com \
    storage.googleapis.com \
    cloudfunctions.googleapis.com \
    pubsub.googleapis.com \
    run.googleapis.com \
    serviceusage.googleapis.com \
    drive.googleapis.com \
    appengine.googleapis.com
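As a minimal sketch, the same command can be assembled from a Python list so that the set of services lives in one place. The helper name `build_enable_command` is hypothetical and is not part of Sinergym; the service identifiers are the ones from the command above. The sketch only prints the command and does not run it.

```python
import shlex

# Service identifiers copied from the `gcloud services enable` command above.
REQUIRED_SERVICES = [
    "artifactregistry.googleapis.com",
    "cloudapis.googleapis.com",
    "cloudbuild.googleapis.com",
    "containerregistry.googleapis.com",
    "gmail.googleapis.com",
    "sql-component.googleapis.com",
    "sqladmin.googleapis.com",
    "storage-component.googleapis.com",
    "storage.googleapis.com",
    "cloudfunctions.googleapis.com",
    "pubsub.googleapis.com",
    "run.googleapis.com",
    "serviceusage.googleapis.com",
    "drive.googleapis.com",
    "appengine.googleapis.com",
]


def build_enable_command(services):
    """Return the argument list for `gcloud services enable` (hypothetical helper)."""
    if not services:
        raise ValueError("at least one service is required")
    return ["gcloud", "services", "enable", *services]


if __name__ == "__main__":
    # Print the command instead of executing it, so the sketch has no side effects.
    print(shlex.join(build_enable_command(REQUIRED_SERVICES)))
```

The resulting list can be passed to `subprocess.run` once you have checked that it matches the services your project actually needs.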

Alternatively, you can enable these services from the Google Cloud Platform Console.

If you have installed Sinergym and the Sinergym extras, the Google Cloud SDK must be linked with other Python modules for some functionality to work later. Please run the following in your terminal:

$ gcloud auth application-default login

12.1.2. Use our container in Google Cloud Platform

Our Sinergym container is currently uploaded to Container Registry as a public image. You can use it locally:

$ docker run -it eu.gcr.io/sinergym/sinergym:latest

If you want to use it in a GCE VM, you can run the following:

$ gcloud compute instances create-with-container sinergym \
    --container-image eu.gcr.io/sinergym/sinergym \
    --zone europe-west1-b \
    --container-privileged \
    --container-restart-policy never \
    --container-stdin \
    --container-tty \
    --boot-disk-size 20GB \
    --boot-disk-type pd-ssd \
    --machine-type n2-highcpu-8

We also have containers available on Docker Hub. Please visit our repository.

Note

It is possible to change parameters in order to set up your own VM with your preferences (see create-with-container).

Warning

--boot-disk-size is really important. By default, the VM is created with 10GB, which is not enough for the Sinergym container. This leads to a silent error in Google Cloud Build (you would need to check the logs, where the incident is not clearly reported).
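The command and the disk-size warning above can be combined in a small Python sketch that builds the argument list and rejects a boot disk that does not exceed the 10GB default. The function name and the `default_disk_gb` parameter are assumptions made for illustration; the flags are those shown in the command above.

```python
import shlex


def build_create_vm_command(name, image, zone, boot_disk_gb=20,
                            machine_type="n2-highcpu-8", default_disk_gb=10):
    """Build `gcloud compute instances create-with-container` arguments.

    Hypothetical helper: it refuses disk sizes that do not exceed the
    10GB default mentioned in the warning above.
    """
    if boot_disk_gb <= default_disk_gb:
        raise ValueError(
            f"boot disk of {boot_disk_gb}GB is too small for the Sinergym container"
        )
    return [
        "gcloud", "compute", "instances", "create-with-container", name,
        "--container-image", image,
        "--zone", zone,
        "--container-privileged",
        "--container-restart-policy", "never",
        "--container-stdin",
        "--container-tty",
        "--boot-disk-size", f"{boot_disk_gb}GB",
        "--boot-disk-type", "pd-ssd",
        "--machine-type", machine_type,
    ]


if __name__ == "__main__":
    # Print the command only; nothing is created in Google Cloud.
    print(shlex.join(build_create_vm_command(
        "sinergym", "eu.gcr.io/sinergym/sinergym", "europe-west1-b")))
```

Failing early in local code is cheaper than discovering the problem later in the build logs.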

12.1.3. Use your own container

Suppose you have forked this repository and want to upload your own container to Google Cloud and use it. You can use cloudbuild.yaml with our Dockerfile for this purpose:

steps:
  # Write in cache for quick updates
  - name: "eu.gcr.io/google.com/cloudsdktool/cloud-sdk"
    entrypoint: "bash"
    args: ["-c", "docker pull eu.gcr.io/${PROJECT_ID}/sinergym:latest || exit 0"]
  # Build image (using cache if it's possible)
  - name: "eu.gcr.io/google.com/cloudsdktool/cloud-sdk"
    entrypoint: "docker"
    args:
      [
        "build",
        "-t",
        "eu.gcr.io/${PROJECT_ID}/sinergym:latest",
        "--cache-from",
        "eu.gcr.io/${PROJECT_ID}/sinergym:latest",
        "--build-arg",
        "SINERGYM_EXTRAS=[DRL,gcloud]",
        ".",
      ]
  # Push image built to container registry
  - name: "eu.gcr.io/google.com/cloudsdktool/cloud-sdk"
    entrypoint: "docker"
    args: ["push", "eu.gcr.io/${PROJECT_ID}/sinergym:latest"]
  # This container is going to be public (Change command in other case)
  # - name: "gcr.io/cloud-builders/gsutil"
  #   args:
  #     [
  #       "iam",
  #       "ch",
  #       "AllUsers:objectViewer",
  #       "gs://artifacts.${PROJECT_ID}.appspot.com",
  #     ]
# Other options for execute build (not container)
options:
  diskSizeGb: "10"
  machineType: "E2_HIGHCPU_8"
timeout: 86400s
images: ["eu.gcr.io/${PROJECT_ID}/sinergym:latest"]

This file does the following:

Pulls the previous image to use as a cache for quick updates (if an older container was already uploaded).

Builds the image (using the cache if it is available).

Pushes the built image to Container Registry.

Makes the container public in Container Registry (this step is commented out in the file above).

There is an options section at the end of the file. Do not confuse this part with the virtual machine configuration: Google Cloud uses a helper VM to build everything mentioned above. We also use this YAML file to upgrade our own container, because the PROJECT_ID variable is defined by the Google Cloud SDK and its value is the current project in your Google Cloud global configuration.

Warning

Just as the VM needs more disk space, Google Cloud Build needs at least 10GB to work correctly. Otherwise it may fail.

Warning

If your local computer doesn’t have enough free space, it might report the same error (the Google Cloud error manager makes no distinction), so be careful.

To execute cloudbuild.yaml, run the following:

$ gcloud builds submit --region europe-west1 \
    --config ./cloudbuild.yaml .

--substitutions can be used to configure build parameters if they are needed.
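As a hedged illustration of the `--substitutions` flag, the sketch below formats a dictionary into the `KEY=VALUE,KEY=VALUE` form that gcloud expects. User-defined substitution names in Cloud Build start with an underscore and use uppercase letters, digits and underscores. The name `_IMAGE_TAG` is hypothetical: the cloudbuild.yaml shown earlier does not define it, so it would only take effect if you add a matching variable to your own file. The helper itself is not part of Sinergym.

```python
import re

# User-defined Cloud Build substitutions: leading underscore, then A-Z, 0-9 or _.
_USER_KEY = re.compile(r"_[A-Z0-9_]+")


def format_substitutions(values):
    """Return a `--substitutions=...` argument for `gcloud builds submit`.

    Hypothetical helper. Values containing commas are rejected because this
    sketch does not handle gcloud's alternative list delimiters.
    """
    parts = []
    for key, value in values.items():
        if not _USER_KEY.fullmatch(key):
            raise ValueError(f"invalid user-defined substitution name: {key!r}")
        if "," in str(value):
            raise ValueError(f"value for {key} must not contain a comma")
        parts.append(f"{key}={value}")
    return "--substitutions=" + ",".join(parts)


if __name__ == "__main__":
    print(format_substitutions({"_IMAGE_TAG": "v1"}))  # --substitutions=_IMAGE_TAG=v1
```

The returned string can be appended to the `gcloud builds submit` command shown above.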

Note

The final “.” in the command is the build source directory, which contains the Dockerfile needed to build the container image (see build-config).

Note

In cloudbuild.yaml there is a variable named PROJECT_ID. However, it is not defined in substitutions, because it is a default variable provided by Google Cloud. When the build begins, “$PROJECT_ID” is set to the current value in the gcloud configuration (see substitutions-variables).
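To illustrate what this substitution amounts to, the sketch below uses Python's `string.Template`, which happens to share the `${NAME}` syntax. It only mimics the effect locally: Cloud Build performs the real substitution itself, and `my-project` is a placeholder project ID.

```python
from string import Template

# Image reference as written in cloudbuild.yaml.
IMAGE_TEMPLATE = Template("eu.gcr.io/${PROJECT_ID}/sinergym:latest")


def resolve_image(project_id):
    """Return the image name Cloud Build would target for the given project."""
    return IMAGE_TEMPLATE.substitute(PROJECT_ID=project_id)


if __name__ == "__main__":
    print(resolve_image("my-project"))  # eu.gcr.io/my-project/sinergym:latest
```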

12.1.4. Create your VM or MIG

To create a VM that uses this container, here is an example:

$ gcloud compute instances create-with-container sinergym \
    --container-image eu.gcr.io/sinergym/sinergym \
    --zone europe-west1-b \
    --container-privileged \
    --container-restart-policy never \
    --container-stdin \
    --container-tty \
    --boot-disk-size 20GB \
    --boot-disk-type pd-ssd \
    --machine-type n2-highcpu-8

Note

--container-restart-policy never is really important for correct functionality.

Warning

If you connect to the VM immediately after creating it, the container may not have been created yet. This may look like an error, and Google Cloud should arguably report it. If this happens, wait several minutes.
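Rather than waiting by hand, the check can be retried in a loop. The following is a generic polling sketch, not something Sinergym provides: `probe` is a hypothetical callable that you supply (for example, one that checks whether the container appears in the Docker container list on the VM), and the default timeout and interval are arbitrary.

```python
import time


def wait_until(probe, timeout_s=600.0, interval_s=15.0,
               sleep=time.sleep, clock=time.monotonic):
    """Call `probe` until it returns True or `timeout_s` seconds have passed.

    Returns True if the probe succeeded, False on timeout. `sleep` and
    `clock` are injectable so the loop can be tested without real waiting.
    """
    deadline = clock() + timeout_s
    while True:
        if probe():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


if __name__ == "__main__":
    # Demonstration with a fake probe that succeeds on the third attempt.
    attempts = {"count": 0}

    def fake_probe():
        attempts["count"] += 1
        return attempts["count"] >= 3

    print(wait_until(fake_probe, timeout_s=5, interval_s=0))  # True
```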

To create a MIG, you first need to create a machine template, for example:

$ gcloud compute instance-templates create-with-container sinergym-template \
    --container-image eu.gcr.io/sinergym/sinergym \
    --container-privileged \
    --service-account storage-account@sinergym.iam.gserviceaccount.com \
    --scopes https://www.googleapis.com/auth/cloud-platform,https://www.googleapis.com/auth/devstorage.full_control \
    --container-env=gce_zone=europe-west1-b,gce_project_id=sinergym \
    --container-restart-policy never \
    --container-stdin \
    --container-tty \
    --boot-disk-size 20GB \
    --boot-disk-type pd-ssd \
    --machine-type n2-highcpu-8

Note

The --service-account, --scopes and --container-env parameters are explained in Containers permission to bucket storage output. Please read that section before using these parameters, since they require prior configuration.

Then, you can create an instance group as large as you want:

$ gcloud compute instance-groups managed create example-group \
    --base-instance-name sinergym-vm \
    --size 3 \
    --template sinergym-template

Warning

It is possible that your quota doesn’t let you have more than one VM at the same time. In that case, the remaining VMs will probably stay initializing and never become ready. If this is your case, we recommend checking your quotas here.

12.1.5. Initiate your VM

Your virtual machine is ready! To connect you can use ssh (see gcloud-ssh):

$ gcloud compute ssh <machine-name>

Google Cloud uses a Container-Optimized OS (see documentation) in the VM. This OS has Docker pre-installed, along with the Sinergym container.

To use this container on the machine, you only have to run:

$ docker attach <container-name-or-ID>

And now you can execute your own experiments in Google Cloud! For example, you can enter the remote container with gcloud ssh and execute DRL_battery.py for the experiment you want.

12.2. Executing experiments in remote containers

DRL_battery.py and load_agent.py are available in every remote container and are used to execute experiments and evaluations. They can be combined with Google Cloud Bucket, Weights and Biases, auto-remove, etc.

Note

DRL_battery.py can also be used in local experiments to send output data and artifacts to remote storage such as wandb, without configuring cloud computing.

The structure of the JSON used to configure the experiment or evaluation is specified in the How to use section.

Warning

For auto_delete to work correctly, please use MIGs instead of individual instances.
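This page does not describe how auto_delete is implemented, and the code in sinergym/utils/gcloud.py may work differently. Purely as an illustration of removing one instance from a MIG, the sketch below builds the corresponding gcloud CLI command. The helper name is hypothetical, `example-group` and `europe-west1-b` are borrowed from the examples on this page, and the instance name `sinergym-vm-abcd` is made up.

```python
import shlex


def build_delete_from_mig_command(group, instance, zone):
    """Build a `gcloud compute instance-groups managed delete-instances` call.

    Hypothetical helper, shown only to illustrate deleting an instance
    through its managed instance group.
    """
    return [
        "gcloud", "compute", "instance-groups", "managed", "delete-instances",
        group,
        f"--instances={instance}",
        f"--zone={zone}",
    ]


if __name__ == "__main__":
    # Print the command only; nothing is deleted.
    print(shlex.join(build_delete_from_mig_command(
        "example-group", "sinergym-vm-abcd", "europe-west1-b")))
```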

12.2.1. Containers permission to bucket storage output

As you can see in the Sinergym template explained in Create your VM or MIG, --scopes, --service-account and --container-env are specified. Their purpose is to make the remote_store option in DRL_battery.py work correctly. These parameters give each container permission to write to the bucket and to manage Google Cloud Platform (the auto instance remove function). The container environment variables indicate the zone and the project ID.
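As a minimal sketch of how code running inside the container could read these values, the helper below looks up `gce_zone` and `gce_project_id`, the names set through --container-env in the template. The function is hypothetical and is not Sinergym's own implementation.

```python
import os


def read_gce_context(env=None):
    """Return (zone, project_id) from the container environment.

    The variable names match --container-env in the instance template;
    the helper itself is an illustrative assumption.
    """
    env = os.environ if env is None else env
    missing = [name for name in ("gce_zone", "gce_project_id") if not env.get(name)]
    if missing:
        raise KeyError(f"missing container environment variables: {missing}")
    return env["gce_zone"], env["gce_project_id"]


if __name__ == "__main__":
    # Sample values taken from the template example on this page.
    sample = {"gce_zone": "europe-west1-b", "gce_project_id": "sinergym"}
    print(read_gce_context(sample))  # ('europe-west1-b', 'sinergym')
```

Passing a dictionary instead of relying on `os.environ` keeps the helper easy to test outside the container.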

Hence, it is necessary to set up this service account and grant it privileges for that purpose. Following the Google authentication documentation, we do the following:

$ gcloud iam service-accounts create storage-account

$ gcloud projects add-iam-policy-binding PROJECT_ID --member="serviceAccount:storage-account@PROJECT_ID.iam.gserviceaccount.com" --role="roles/owner"

$ gcloud iam service-accounts keys create PROJECT_PATH/google-storage.json --iam-account=storage-account@PROJECT_ID.iam.gserviceaccount.com

$ export GOOGLE_CLOUD_CREDENTIALS=PROJECT_PATH/google-storage.json

In short, we create a new service account called storage-account. Then, we grant this account the roles/owner role. The next step is to create a JSON key file called google-storage.json in our project root (.gitignore keeps this file out of the remote repository). Finally, we export the path to this file as GOOGLE_CLOUD_CREDENTIALS on our local computer so that the gcloud SDK knows it has to use that key to authenticate.
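Before launching an experiment, it can help to confirm that the exported variable points to a plausible key file. The sketch below uses only the standard library: it reads GOOGLE_CLOUD_CREDENTIALS (the variable name used on this page) and checks a few fields that service account key files contain. Both helper names are hypothetical, and the check does not verify that the key is valid or still active.

```python
import json
import os

# Fields present in a service account key file; only a subset is checked here.
REQUIRED_KEYS = {"type", "project_id", "private_key", "client_email"}


def key_path_from_env(env=None):
    """Return the key file path stored in GOOGLE_CLOUD_CREDENTIALS."""
    env = os.environ if env is None else env
    path = env.get("GOOGLE_CLOUD_CREDENTIALS")
    if not path:
        raise KeyError("GOOGLE_CLOUD_CREDENTIALS is not set")
    return path


def load_service_account_key(path):
    """Load the JSON key file and verify that it looks like a service account key."""
    with open(path, encoding="utf-8") as handle:
        data = json.load(handle)
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"key file is missing fields: {sorted(missing)}")
    if data["type"] != "service_account":
        raise ValueError("key file is not a service account key")
    return data
```

A typical use would be `load_service_account_key(key_path_from_env())["client_email"]`, which should end in `.iam.gserviceaccount.com` for the account created above.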

12.2.2. Visualize remote wandb log in real-time

You only have to go to Weights & Biases and log in with your GitHub account.

12.3. Google Cloud Alerts

Google Cloud Platform includes functionality to trigger events and generate alerts in response. A trigger has been created in our gcloud project to notify us when an experiment has finished. This alert can be received in several ways (Slack, SMS, email, etc.). If you want to do the same, please check the Google Cloud Alerts documentation here.